Awesome Neural Network Stuff

Explore how to apply neural networks to physics data analyses Visit a

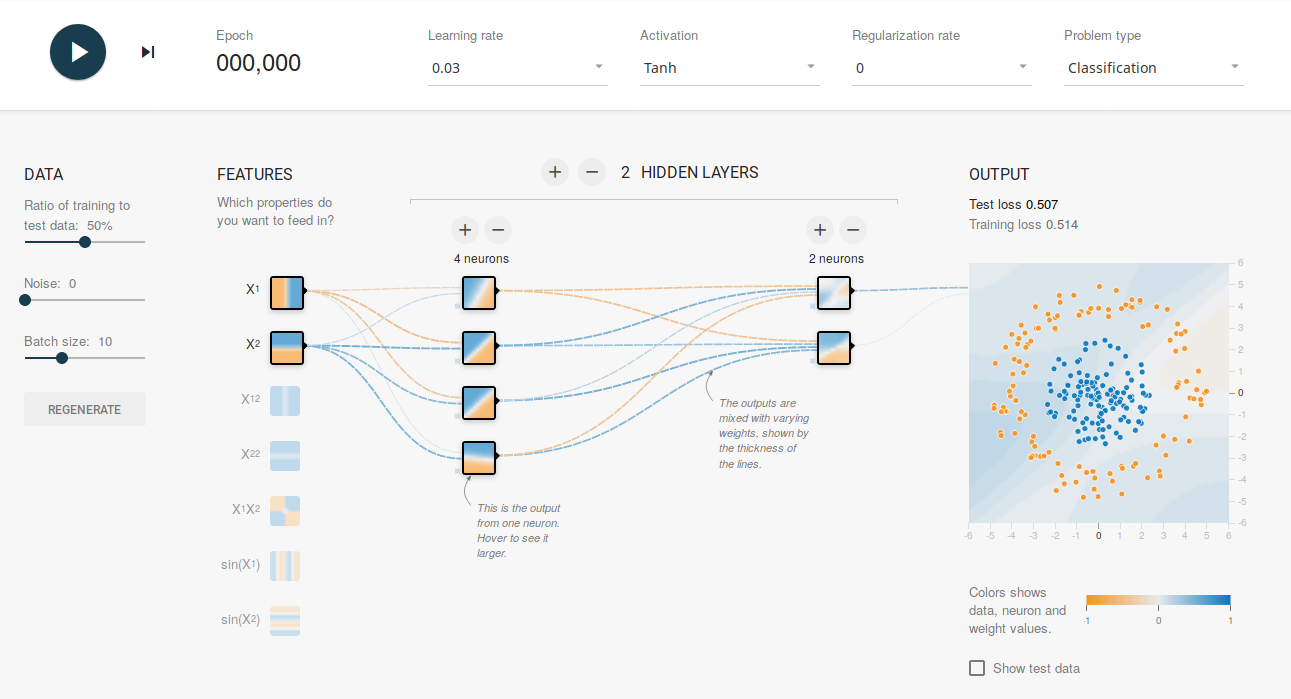

nice neural network playground

Visit a

nice neural network playground

Understanding neural network reasoning

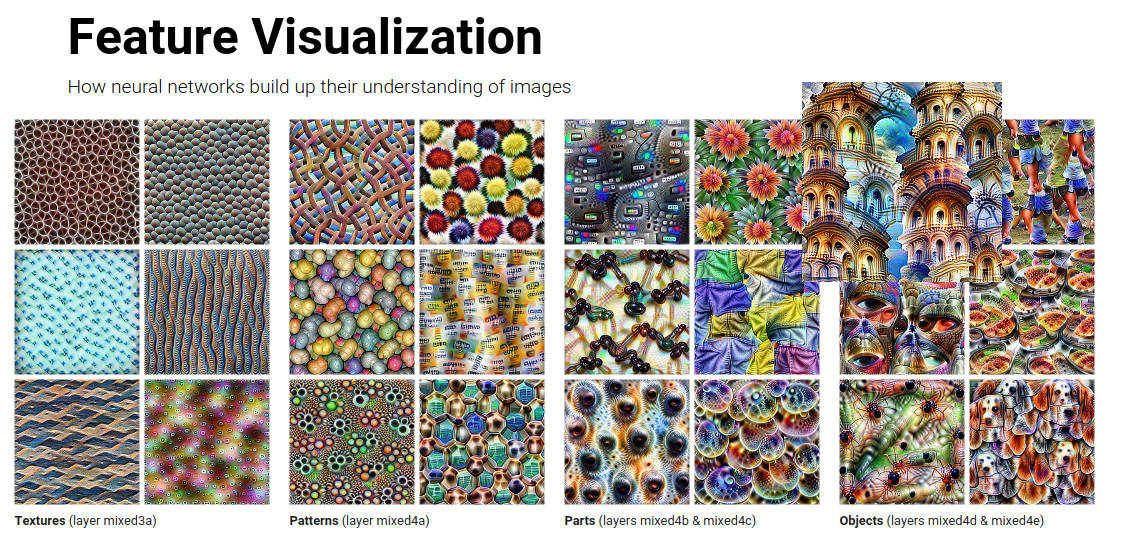

Feature Visualization of neural networks


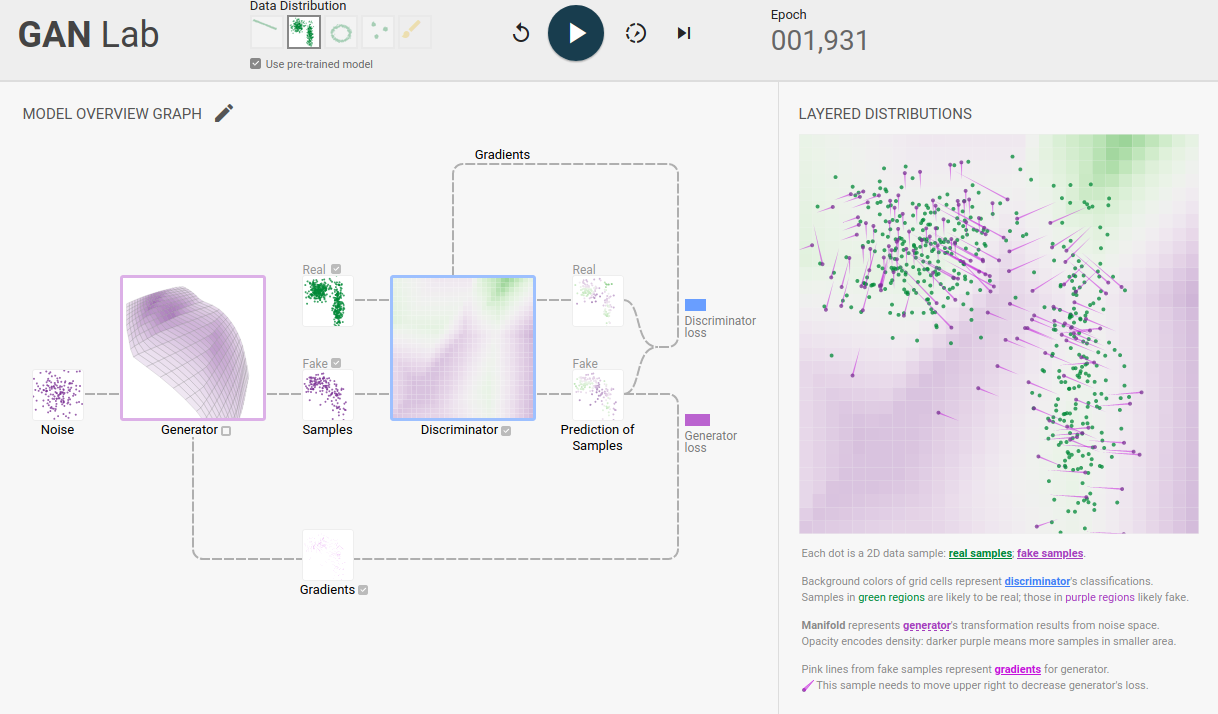

Play with Generative Adversarial Networks in your browser

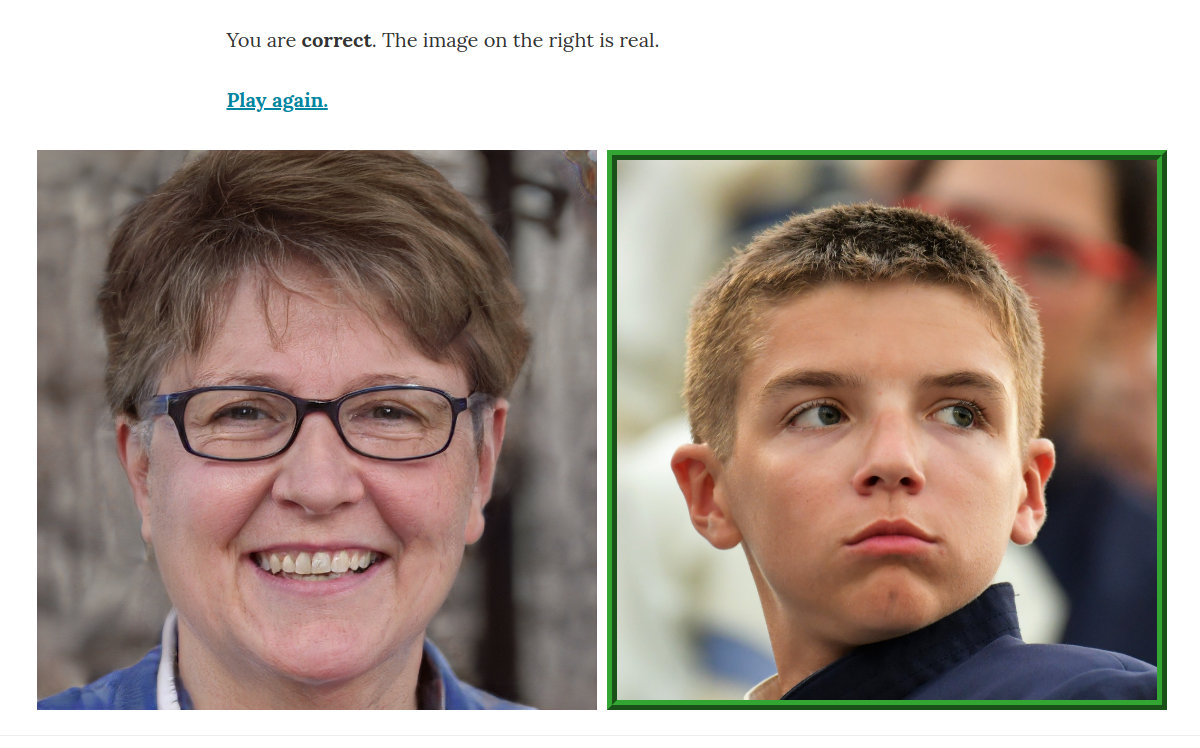

Play "which face is real"

Try to identify which images are real photographs and which ones were generated using Generative Adversarial Networks.

News

Read the article "Cosmic Radiation: Breakthrough Thanks to Artificial Intelligence" in the FAU journal, discussing my most recent research published in Physical Review Letters.

Is machine learning causing a paradigm shift in how we analyze data in physics research? I recently co-authored a textbook that addresses this question and introduces physicists to state-of-the-art machine learning. Feel free to also check out the many tutorials demonstrating the application of machine learning to various physics challenges. All tutorials are powered by Keras.

Focus of my research

I am a postdoctoral researcher at the Erlangen Centre for Astroparticle Physics and apply artificial intelligence (AI) to challenges in astroparticle physics. Currently, I am researching machine learning techniques for object reconstruction to enhance the observation capacities of multi-messenger observatories. Additionally, I explore unsupervised learning approaches that can effectively model complex high-dimensional data distributions. These approximations promise to significantly speed up physics simulations, enable instrument optimizations, and model complicated backgrounds, ultimately enhancing analyses with greater precision.

For centuries, astronomers have used telescopes to explore the gigantic universe surrounding us by directly measuring the incoming messenger particles, e.g., visible and infrared light. These measurements become much more complicated at higher energies as the incoming messenger particles induce massive particle cascades when interacting with the atmosphere. The footprints of such cascades can extend over several square kilometers when arriving at the Earth's surface. Large collaborations of hundreds of scientists, engineers, and technicians are operating gigantic experiments observing these particle cascades. The window to the universe at extreme energies and its most radical phenomena can be opened by unveiling the secrets of their measured data.

The capabilities of machine learning offer outstanding opportunities to better reveal the fascinating processes in our universe.

Gamma-ray astronomy

Gamma rays are the highest-energy form of electromagnetic radiation, invisible to the naked eye. They carry detailed information about the universe's most explosive and energetic processes, helping scientists piece together the puzzle of its evolution and composition. The next-generation instruments for observations of the gamma-ray sky are the Southern Wide-field Gamma-Ray Observatory (SWGO) and the Cherenkov Telescope Array (CTA). These two complementary observatories will provide new insights into the non-thermal universe, revolutionizing our understanding of the gamma-ray sky.

Why AI?

Current state-of-the-art AI algorithms rely on deep learning, i.e., machine-learning techniques based on deep neural networks. These models are capable of analyzing and recognizing patterns in vast amounts of data with unprecedented precision and efficiency. These advances make it possible to discover increasingly small structures within our measurement data. This complexity requires dedicated development and research of the methods to ensure their validity, robustness, and precision, with the potential to fundamentally change the way physics data is analyzed.

The fascination of astroparticle physics

Astroparticle physics combines the research field of astrophysics, which explores the largest scales we can imagine, our cosmos, with particle physics, researching the tiniest particles in our universe.

The fascination with astroparticle physics lies in its ability to unveil the universe's most elusive secrets using messenger particles like gamma and cosmic rays. They provide a unique lens through which we can study otherwise inaccessible phenomena and investigate fundamental questions in understanding our world.

Observing the extreme Universe: Gamma rays and cosmic rays reveal the ultimate forces in our cosmos. These messengers allow us to image massive stellar explosions, the violent environments around black holes, and the birth of new stars, and they aid us in understanding the physical processes happening at these exotic places.

Cosmic Accelerators and fundamental physics: These cosmic phenomena serve as natural particle accelerators, capable of producing particles with energies far beyond human-built particle accelerators such as the LHC at CERN. Understanding these processes will unravel and probe the fundamental laws of physics.

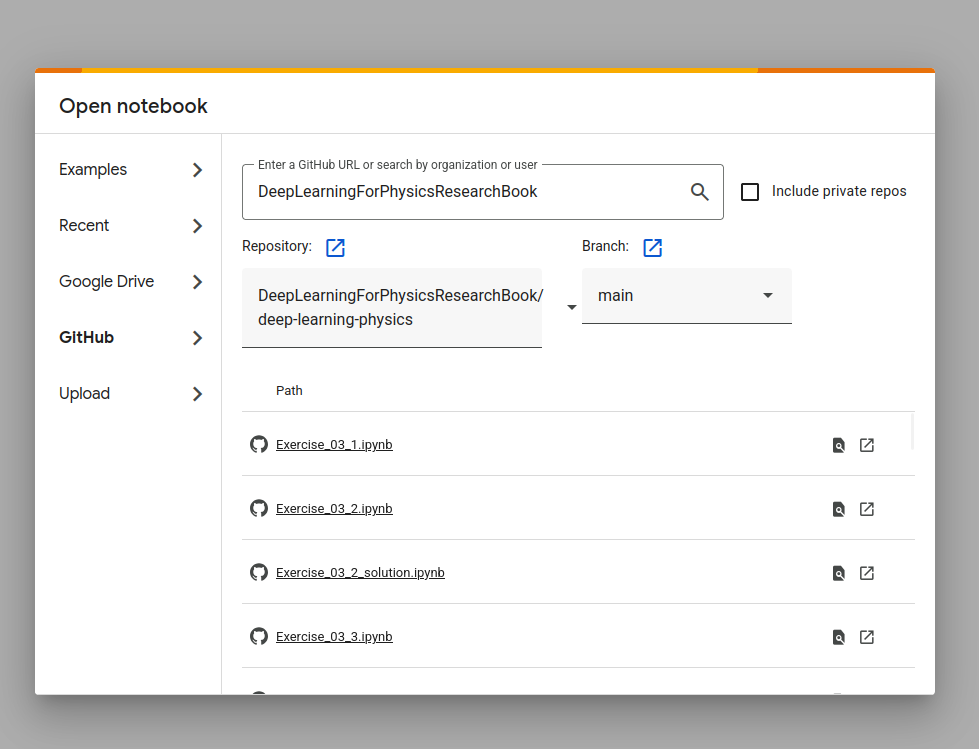

Excited? Apply deep learning in astroparticle physics yourself

Explore the potential of deep learning to improve the sensitivity of astroparticle experiments using deep neural networks. Open the notebooks on Google Colab to train the networks with GPU support or see the code on GitHub.

Utilize fully-connected networks for event reconstruction....

More tutorials, lectures, and material

Find more tutorials on my:

Or visit the large collection of deep learning applications in physics at https://deeplearningphysics.org/

Or fork the GitHub repository.

Invited Talks, Lectures, and Conference Contributions

2025

2024

2023

2022

2021

2020

2019

2018

2017

Material of Invited Lectures & Tutorials

Find my slides, lectures, and deep learning tutorials (including notebooks) below. Feel free to check out the tutorials to train neural networks yourself using the Keras framework.

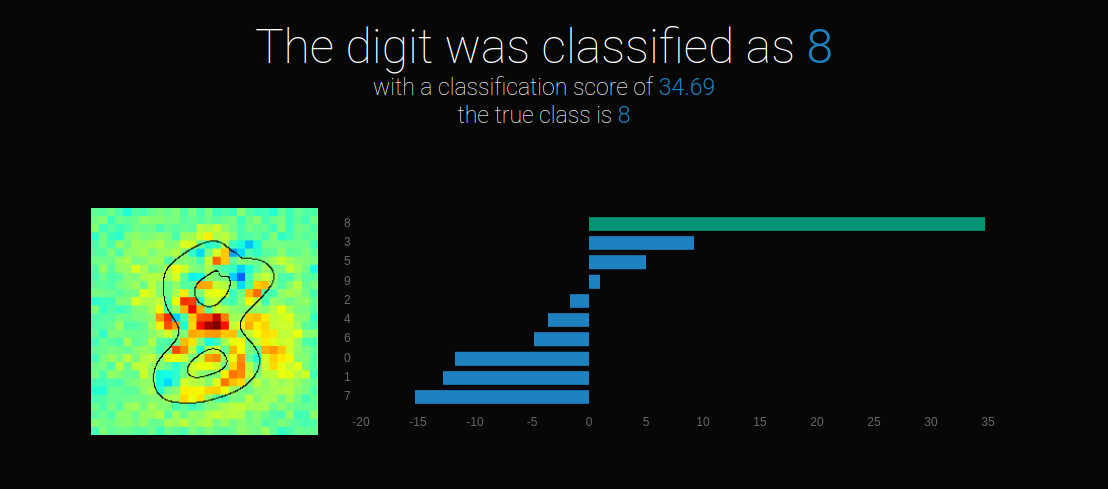

Introspection of Neural Networks

Tutorial about neural network introspection.

Introspection of neural networks is the process of looking inside the black box holding billions of parameters to understand their predictions and reasoning.

The tutorial contains slides about the basic theory on prediction analysis and feature visualization.

Open the basic introduction on Google Colab to gain a first understanding of DNN reasoning for the recognition of digits.

Generative Models

Generative models are fascinating deep learning algorithms designed to generate new data samples that are similar to samples from a given dataset. During training, the models learn the underlying patterns and statistics of the data, enabling them to generate novel and realistic instances that resemble the original data distribution. Try out the fast generation of physics simulations using generative models. Open the tutorials on Google Colab to train Generative Adversarial Networks (GANs) with GPU support or have a look at the slides.

Familiarize yourself with GANs and learn about fast sample generation....

Learn about advanced generative models (Wasserstein GANs)....

Explore the world of generative models and their application to physics datasets....

Tutorial on GANs and Wasserstein GANs given at the 2nd Terascale School of Machine Learning. It covers the basic theory of the Wasserstein distance and related similarity measures and divergences for high-dimensional spaces.
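
To give a feeling for the Wasserstein distance mentioned above, here is a minimal sketch for the simplest possible case: two one-dimensional samples of equal size, where the distance reduces to the mean absolute difference between the sorted values. This is not taken from the tutorial material; the function name `wasserstein_1d` and the toy samples are assumptions made for illustration. In a Wasserstein GAN the distance is not computed in closed form like this but is approximated in high dimensions by a trained critic network.

```python
def wasserstein_1d(samples_a, samples_b):
    """Empirical Wasserstein-1 distance between two equal-size 1D samples.

    Illustrative helper (hypothetical name): for uniform weights in one
    dimension, the optimal transport plan pairs the sorted values.
    """
    if not samples_a or len(samples_a) != len(samples_b):
        raise ValueError("expects two non-empty samples of equal size")
    a_sorted = sorted(samples_a)
    b_sorted = sorted(samples_b)
    # Mean distance each sorted point has to be moved.
    return sum(abs(a - b) for a, b in zip(a_sorted, b_sorted)) / len(a_sorted)


# Toy example: the second sample is the first one shifted by 0.5.
reference = [0.0, 1.0, 2.0, 3.0]
shifted = [x + 0.5 for x in reference]
print(wasserstein_1d(reference, shifted))
```

Unlike many divergences, the value grows smoothly with the size of the shift even when the two samples do not overlap, which is one reason the distance is attractive as a training signal for generative models.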

Graph Convolutional Networks

Tutorial about Graph Convolutional Neural Networks. The tutorial contains slides on the basic theory of graphs and convolutions. Convolutions in the spatial and the spectral domain are discussed and explained using code examples. Slides and code are available.
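
As a rough illustration of what a graph convolution does, the sketch below implements one common formulation, the normalized propagation rule ReLU(D^-1/2 (A + I) D^-1/2 X W), using NumPy. It is not the tutorial's own code; the function name `gcn_layer`, the three-node path graph, and the all-ones weight matrix are assumptions chosen only to keep the example small.

```python
import numpy as np


def gcn_layer(adjacency, features, weights):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 X W).

    Illustrative helper (hypothetical name), not a library API.
    """
    # Add self-loops so every node keeps its own features.
    a_hat = adjacency + np.eye(adjacency.shape[0])
    # Symmetric normalization by the node degrees.
    degree = a_hat.sum(axis=1)
    d_inv_sqrt = np.diag(1.0 / np.sqrt(degree))
    a_norm = d_inv_sqrt @ a_hat @ d_inv_sqrt
    # Aggregate neighbor features, mix channels, apply ReLU.
    return np.maximum(a_norm @ features @ weights, 0.0)


# Toy path graph 0 - 1 - 2 with one-hot node features.
adjacency = np.array([[0.0, 1.0, 0.0],
                      [1.0, 0.0, 1.0],
                      [0.0, 1.0, 0.0]])
features = np.eye(3)
weights = np.ones((3, 2))  # placeholder for trainable parameters

output = gcn_layer(adjacency, features, weights)
print(output.shape)
```

Each output row mixes a node's own features with those of its direct neighbors, so the two end nodes of the path, which have identical neighborhoods, receive identical outputs. Stacking several such layers lets information travel over longer distances in the graph.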

Explore the potential of deep learning to improve the quality of astroparticle experiments by reconstructing cosmic-ray properties using deep neural networks. The tutorial was held at the Paris-Saclay AstroParticle Symposium 2021. Open the notebooks on Google Colab to train the networks with GPU support or see the code on GitHub.

Utilize convolutional networks (advanced) for the reconstruction....

Introduction to Deep Learning

Tutorial about basic machine learning and deep learning concepts, covering neural networks, regularization, and convolutional networks. The tutorial contains slides and code. For the code, see the example section at VISPA.

Lectures

Physics

Water-Cherenkov-based observatories form the high-duty-cycle and wide field of view backbone for observations of the gamma-ray sky at very high energies. In this work, we propose a deep learning application based on graph neural networks (GNNs) for background rejection and energy reconstruction and compare it to state-of-the-art approaches. We find that GNNs outperform hand-designed classification algorithms and observables in background rejection and find an improved energy resolution compared to a template-based method.

We present measurements of the atmospheric depth of the shower maximum Xmax, inferred for the first time on an event-by-event level using the Surface Detector of the Pierre Auger Observatory. Using deep learning, we were able to extend measurements of the Xmax distributions up to energies of 100 EeV (10^20 eV), not yet revealed by current measurements, providing new insights into the mass composition of cosmic rays at extreme energies. Gaining a 10-fold increase in statistics compared to the Fluorescence Detector data, we find evidence that the rate of change of the average Xmax with the logarithm of energy features three breaks at 6.5±0.6 (stat)±1 (sys) EeV, 11±2 (stat)±1 (sys) EeV, and 31±5 (stat)±3 (sys) EeV, in the vicinity of the three prominent features (ankle, instep, suppression) of the cosmic-ray flux. The energy evolution of the mean and standard deviation of the measured Xmax distributions indicates that the mass composition becomes increasingly heavier and purer, thus being incompatible with a large fraction of light nuclei between 50 EeV and 100 EeV.

We report an investigation of the mass composition of cosmic rays with energies from 3 to 100 EeV (1 EeV = 10^18 eV) using the distributions of the depth of shower maximum Xmax. The analysis relies on ∼50,000 events recorded by the Surface Detector of the Pierre Auger Observatory and a deep-learning-based reconstruction algorithm. Above energies of 5 EeV, the data set offers a 10-fold increase in statistics with respect to fluorescence measurements at the Observatory. After cross-calibration using the Fluorescence Detector, this enables the first measurement of the evolution of the mean and the standard deviation of the Xmax distributions up to 100 EeV. Our findings are threefold: (1.) The evolution of the mean logarithmic mass towards a heavier composition with increasing energy can be confirmed and is extended to 100 EeV. (2.) The evolution of the fluctuations of Xmax towards a heavier and purer composition with increasing energy can be confirmed with high statistics. We report a rather heavy composition and small fluctuations in Xmax at the highest energies. (3.) We find indications for a characteristic structure beyond a constant change in the mean logarithmic mass, featuring three breaks that are observed in proximity to the ankle, instep, and suppression features in the energy spectrum.

For the analysis of data taken by Imaging Air Cherenkov Telescopes (IACTs), a large number of air shower simulations are needed to derive the instrument response. The simulations are very complex, involving computational and memory-intensive calculations, and are usually performed repeatedly for different observation intervals to take into account the varying optical sensitivity of the instrument. The use of generative models based on deep neural networks offers the prospect for memory-efficient storing of huge simulation libraries and cost-effective generation of a large number of simulations in an extremely short time. In this work, we use Wasserstein Generative Adversarial Networks to generate photon showers for an IACT equipped with the FlashCam design, which has more than 1,500 pixels. We demonstrate for the first time that the generated images have high fidelity with respect to low-level observables, the Hillas parameters, their physical properties, as well as their correlations. The found increase in generation speed in the order of 10^5 yields promising prospects for fast and memory-efficient simulations of air showers for IACTs.

Imaging Air Cherenkov Telescopes (IACTs) are essential to ground-based observations of gamma rays in the GeV to TeV regime. One particular challenge of ground-based gamma-ray astronomy is an effective rejection of the hadronic background. We propose a new deep-learning-based algorithm for classifying images measured using single or multiple Imaging Air Cherenkov Telescopes by utilizing graph convolutional networks. For images cleaned of the light from the night sky, this allows for an efficient algorithm design that bypasses the challenge of sparse images in CNN-based approaches, and we find promising performance with improvements over previous machine-learning and deep-learning-based methods.

A core principle of physics is knowledge gained from data. Thus, deep learning has instantly entered physics and may become a new paradigm in basic and applied research. This textbook addresses physics students and physicists who want to understand what deep learning actually means, and what is the potential for their own scientific projects. Being familiar with linear algebra and parameter optimization is sufficient to jump-start deep learning. Adopting a pragmatic approach, basic and advanced applications in physics research are described. Also offered are simple hands-on exercises for implementing deep networks for which python code and training data can be downloaded (see deeplearningphysics.org).

The present white paper is submitted as part of the “Snowmass” process to help inform the long-term plans of the United States Department of Energy and the National Science Foundation for high-energy physics. It summarizes the science questions driving the Ultra-High-Energy Cosmic-Ray (UHECR) community and provides recommendations on the strategy to answer them in the next two decades.

Ph.D. thesis, RWTH Aachen University, 2021. December 2021

We introduce a collection of datasets from fundamental physics research ‐ including particle physics, astroparticle physics, and hadron- and nuclear physics ‐ for supervised machine learning studies. These datasets, containing hadronic top quarks, cosmic-ray induced air showers, phase transitions in hadronic matter, and generator-level histories, are made public to simplify future work on cross-disciplinary machine learning and transfer learning in fundamental physics. Based on these data, we present a simple yet flexible graph-based neural network architecture that can easily be applied to a wide range of supervised learning tasks in these domains. We show that our approach reaches performance close to state-of-the-art dedicated methods on all datasets. To simplify adaptation for various problems, we provide easy-to-follow instructions on how graph-based representations of data structures, relevant for fundamental physics, can be constructed and provide code implementations for several of them. Implementations are also provided for our proposed method and all reference algorithms.

The atmospheric depth of the air shower maximum Xmax is an observable commonly used for the determination of the nuclear mass composition of ultra-high energy cosmic rays. Direct measurements of Xmax are performed using observations of the longitudinal shower development with fluorescence telescopes. At the same time, several methods have been proposed for an indirect estimation of Xmax from the characteristics of the shower particles registered with surface detector arrays. In this paper, we present a deep neural network (DNN) for the estimation of Xmax. The reconstruction relies on the signals induced by shower particles in the ground based water-Cherenkov detectors of the Pierre Auger Observatory. The network architecture features recurrent long short-term memory layers to process the temporal structure of signals and hexagonal convolutions to exploit the symmetry of the surface detector array. We evaluate the performance of the network using air showers simulated with three different hadronic interaction models. Thereafter, we account for long-term detector effects and calibrate the reconstructed Xmax using fluorescence measurements. Finally, we show that the event-by-event resolution in the reconstruction of the shower maximum improves with increasing shower energy and reaches less than 25 g/cm² at energies above 2×10¹⁹ eV.

We present a new approach for the identification of ultra-high energy cosmic rays from sources using dynamic graph convolutional neural networks. These networks are designed to handle sparsely arranged objects and to exploit their short- and long-range correlations. Our method searches for patterns in the arrival directions of cosmic rays, which are expected to result from coherent deflections in cosmic magnetic fields. The network discriminates astrophysical scenarios with source signatures from those with only isotropically distributed cosmic rays and allows for the identification of cosmic rays that belong to a deflection pattern. We use simulated astrophysical scenarios where the source density is the only free parameter to show how density limits can be derived. We apply this method to a public data set from the AGASA Observatory.

In recent years, great progress has been made in the fields of machine translation, image classification and speech recognition by using deep neural networks and associated techniques. Recently, the astroparticle physics community successfully adapted supervised learning algorithms for a wide range of tasks including background rejection, object reconstruction, track segmentation and the denoising of signals. Additionally, the first approaches towards fast simulations and simulation refinement indicate the huge potential of unsupervised learning for astroparticle physics. We summarize the latest results, discuss the algorithms and challenges and further illustrate the opportunities for the astrophysics community offered by deep learning based algorithms.

The surface-detector array of the Pierre Auger Observatory measures the footprint of air showers induced by ultra-high energy cosmic rays. The reconstruction of event-by-event information sensitive to the cosmic-ray mass is a challenging task and so far mainly based on fluorescence detector observations with their duty cycle of ≈ 15%. Recently, great progress has been made in multiple fields of machine learning using deep neural networks and associated techniques. Applying these new techniques to air-shower physics opens up possibilities for improved reconstruction, including an estimation of the cosmic-ray composition. In this contribution, we show that deep convolutional neural networks can be used for air-shower reconstruction, using surface-detector data. The focus of the machine learning algorithm is to reconstruct depths of shower maximum. In contrast to traditional reconstruction methods, the algorithm learns to extract the essential information from the signal and arrival-time distributions of the secondary particles. We present the neural-network architecture, describe the training, and assess the performance using simulated air showers.

Simulations of particle showers in calorimeters are computationally time-consuming, as they have to reproduce both energy depositions and their considerable fluctuations. A new approach to ultra-fast simulations are generative models where all calorimeter energy depositions are generated simultaneously. We use GEANT4 simulations of an electron beam impinging on a multi-layer electromagnetic calorimeter for adversarial training of a generator network and a critic network guided by the Wasserstein distance. In most aspects, we observe that the generated calorimeter showers reach the level of showers as simulated with the GEANT4 program.

We use adversarial frameworks together with the Wasserstein distance to generate or refine simulated detector data. The data reflect two-dimensional projections of spatially distributed signal patterns with a broad spectrum of applications. As an example, we use an observatory to detect cosmic ray-induced air showers with a ground-based array of particle detectors. First we investigate a method of generating detector patterns with variable signal strengths while constraining the primary particle energy. We then present a technique to refine simulated time traces of detectors to match corresponding data distributions. With this method we demonstrate that training a deep network with refined data-like signal traces leads to a more precise energy reconstruction of data events compared to training with the originally simulated traces.

We describe a method of reconstructing air showers induced by cosmic rays using deep learning techniques. We simulate an observatory consisting of ground-based particle detectors with fixed locations on a regular grid. The detector’s responses to traversing shower particles are signal amplitudes as a function of time, which provide information on transverse and longitudinal shower properties. In order to take advantage of convolutional network techniques specialized in local pattern recognition, we convert all information to the image-like grid of the detectors. In this way, multiple features, such as arrival times of the first particles and optimized characterizations of time traces, are processed by the network. The reconstruction quality of the cosmic ray arrival direction turns out to be competitive with an analytic reconstruction algorithm. The reconstructed shower direction, energy and shower depth show the expected improvement in resolution for higher cosmic ray energy.

Dr. Jonas Glombitza

I am a postdoc at the Erlangen Centre for Astroparticle Physics, Germany, after finishing my Ph.D. in physics summa cum laude in December 2021 at RWTH Aachen University. Currently, I'm investigating machine learning algorithms in the context of particle and astroparticle physics. I am interested in generative models, graph networks, computer vision, and the application of deep-learning techniques in physics analyses. For five years, I have been a lecturer for the deep learning lecture series in physics at RWTH Aachen University, and I teach machine learning algorithms in international tutorials and workshops. I am a co-author of the textbook Deep Learning for Physics Research, and I am researching the sensitivity optimization of cosmic-ray instruments using modern algorithms.

As a Pierre Auger Collaboration member, a large part of my research has been dedicated to the physics of ultra-high energy cosmic rays and their mass composition. Within the collaboration, I have been the leader of the machine learning task. I am a member of the H.E.S.S. experiment, the Cherenkov Telescope Array (CTA), and the Southern Wide-field Gamma-ray Observatory (SWGO), where I am a coordinator of the analysis working group. My research focuses on the development and implementation of novel machine-learning algorithms for improving the performance of gamma-ray observatories in terms of background rejection and event reconstruction.

I am further researching generative models, and I am involved in developing methods to accelerate simulations, detect anomalies in huge data sets, and design adversarial frameworks for domain adaptation that increase the robustness of machine learning algorithms.

I enjoy breaking down and understanding complex things, learning about new topics, and exploring new technologies. I love to teach and understand things in detail to explain them well. I believe in open, reproducible, and international research. In my spare time, I like to go running and play football with friends. In quiet moments, I love to play the piano. I truly enjoy hiking, traveling, and taking pictures of nature and wild animals all over the world.